After upgrading my k8s cluster, every Kubeflow Pipelines job finished only its first operation and then hung. The cause is a bug in Argo (Kubeflow Pipelines is based on Argo). The simplest and most straightforward solution is to relaunch the k8s cluster with a lower version. In my case, 1.18.20 works very well.

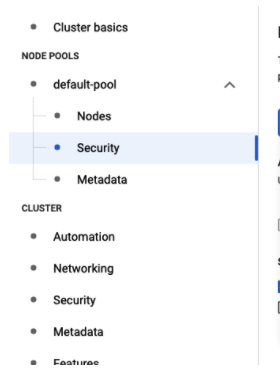

Furthermore, to let tasks in Kubeflow Pipelines run BigQuery jobs in GCP, we need to configure the security settings of the node pool.

As shown above, choose a specific service account that can access BigQuery resources instead of the default Compute Engine service account.

Therefore, to run Kubeflow Pipelines successfully, we need to launch a k8s cluster that follows these rules:

- Use a lower version, such as 1.18.20
- Set a service account that can access the desired resources on the node pools
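
For anyone who prefers the command line to the console, the two rules above could be sketched roughly as follows. This is only an illustration, assuming the cluster runs on GKE and is created with `gcloud container clusters create`; the cluster name, zone, and service account email are hypothetical placeholders, and whether `1.18.20` is still offered depends on the versions GKE currently supports in your zone. The helper `build_create_cluster_command` is my own name, not part of any library.

```python
import shlex


def build_create_cluster_command(name, cluster_version, service_account, zone):
    """Build the gcloud argument list for creating a GKE cluster.

    The command pins the Kubernetes version and attaches a specific
    service account to the default node pool.
    """
    return [
        "gcloud", "container", "clusters", "create", name,
        "--zone", zone,
        # Rule 1: pin a lower Kubernetes version.
        "--cluster-version", cluster_version,
        # Rule 2: use a service account that can access BigQuery
        # instead of the default Compute Engine service account.
        "--service-account", service_account,
    ]


if __name__ == "__main__":
    # All values below are placeholders (assumptions), replace with your own.
    command = build_create_cluster_command(
        name="kfp-cluster",
        cluster_version="1.18.20",
        service_account="kfp-bigquery@my-project.iam.gserviceaccount.com",
        zone="us-central1-a",
    )
    # Print the command for review; run it yourself (or pass the list to
    # subprocess.run) once the values look right.
    print(shlex.join(command))
```

The script only prints the command rather than running it, so it is safe to try without touching any cluster.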